Outcomes

Privacy Dynamics: Learning Privacy Norms for Social Software

Calikli, Gul; Law, Mark; Bandara, Arosha K.; Russo, Alessandra; Dickens, Luke; Price, Blaine A.; Stuart, Avelie; Levine, Mark and Nuseibeh, Bashar (2016). Privacy Dynamics: Learning Privacy Norms for Social Software. In: 2016 IEEE/ACM 11th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, Association for Computing Machinery, pp. 47–56.

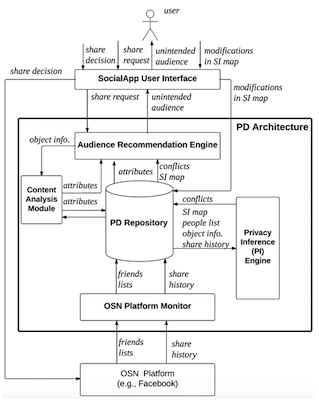

With a growing number of software applications integrating social media platforms, such as Facebook, Twitter or LinkedIn, there is a need to ensure that privacy management capabilities are integrated into software architectures.

Privacy violations are often caused by the misalignment of users' "imagined audience" with the "actual audience". We model the co-presence of multiple audience groups using social identity theory, and have integrated this model into an adaptive software architecture that provides sharing recommendations to users of social software applications and assists them in re-configuring the groups they have explicitly defined. The core of the architecture is implemented using a logic-based learning system.

Experimental results demonstrate that our approach can learn privacy norms with 50–70% specificity, depending on the number of conflicts present, given at least 10 examples of the user's sharing behaviour.

Learning to share

Online social networks (OSNs) allow users to define groups of "friends" as reusable shortcuts for sharing information with a number of social contacts. Posting to closed groups may be perceived as a means of controlling privacy as re-sharing would be restricted to only members of the same group.

However, members of a group may still breach privacy, intentionally or not, by copying and pasting information into a new post and sending it to another group to gain more feedback, exposure and therefore social benefit. Hence, privacy in social network applications cannot be limited to software settings alone. It has to incorporate mechanisms for online control that help users make informed decisions on appropriate recipients for their posts, based on the privacy awareness of the recipient and the level of sensitivity of the information shared.

Within the scope of the Privacy Dynamics project, we propose a privacy control for social network applications, called learning to share. It is a two-pronged approach. Firstly, it helps OSN users make informed decisions about a suitable audience for their posts, and supports the dynamic formation of recipient groups with the objective of increasing the user's social benefit whilst reducing the risk of a privacy breach. Secondly, it is a tool-supported framework that automates the integration of formal methods and machine learning techniques to help practitioners in the OSN domain develop privacy-aware social network applications. The approach dynamically classifies contacts into three friend types ("safe and regular", "safe and occasional" and "risky") based on the level of sensitivity of posts and a rigorous online evaluation of the social benefit and privacy risk of sharing posts with each contact. The classification is computed by means of (i) an online learning algorithm and (ii) a privacy model. The Bayesian-derived online learning algorithm learns at runtime the transition probabilities of a parametric Markov chain model that captures online interactions between each user-contact pair. These probabilities are then used together with the privacy model to dynamically determine the classification of the contacts for each level of post sensitivity. The privacy model takes into account the user's preferred level of risk averseness and dynamically adapts its inference of privacy risk. A prototype has been implemented within the context of Facebook, and evaluated using both a real user case study and simulated data comprising a variety of non-privacy-preserving and privacy-compliant behavioural patterns. The user case study and the experimental results demonstrate the efficacy of our approach.
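To make the learning step more concrete, here is a minimal sketch of one common way to learn Markov chain transition probabilities online in a Bayesian fashion: a Dirichlet prior over each row of the transition matrix, updated by counting observed transitions. It is not the project's implementation. The state names (`ignore`, `feedback`, `reshare`), the thresholds and the `risk_aversion` parameter are assumptions made for illustration, and post sensitivity levels are left out (one learner per sensitivity level would be one way to add them).

```python
# Hypothetical reactions of one contact to a user's successive posts
# (illustrative only; the project's actual chain states are not given here).
STATES = ("ignore", "feedback", "reshare")


class TransitionLearner:
    """Online Bayesian estimate of Markov chain transition probabilities.

    Each row of the transition matrix has a symmetric Dirichlet prior.
    Observing a transition adds one to the matching pseudo-count, and the
    posterior mean is the normalised count.
    """

    def __init__(self, prior=1.0):
        self.counts = {s: {t: prior for t in STATES} for s in STATES}

    def observe(self, prev_state, next_state):
        """Update the posterior with one observed transition."""
        self.counts[prev_state][next_state] += 1.0

    def probability(self, prev_state, next_state):
        """Posterior mean probability of moving prev_state -> next_state."""
        row = self.counts[prev_state]
        return row[next_state] / sum(row.values())


def classify_contact(learner, current_state="ignore", risk_aversion=0.5):
    """Toy decision rule; both thresholds are illustrative assumptions."""
    p_risk = learner.probability(current_state, "reshare")
    p_benefit = learner.probability(current_state, "feedback")
    # A more risk-averse user tolerates a lower re-share probability.
    risk_limit = 0.5 * (1.0 - risk_aversion)
    if p_risk > risk_limit:
        return "risky"
    return "safe and regular" if p_benefit >= 0.5 else "safe and occasional"
```

Because each update only increments a count, the estimate can be refreshed after every interaction without storing the interaction history, which suits runtime learning.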

Privacy Itch and Scratch: On Body Privacy Warnings and Controls

In the current era of ubiquitous devices (smartphones, fitness trackers, etc.), users give away a lot of private and sensitive information. This information gets collected and shared with unknown entities at any time, without users being aware.

In order to control which of their personal data is being collected, who can collect such data, and when this is allowed, users currently need to pre-set privacy rules for each device or application they want to use [1]–[3]. Setting privacy rules is a complex and time-consuming process which many people are unwilling to undertake until their privacy is violated [4], thus increasing the risk of personal information privacy breaches. Even when rules are set, controlling the diffusion of information (and the probable breaches) has become an increasingly daunting task, especially due to the innumerable possibilities of information flow and the varying privacy preferences of users across different contexts. When such breaches (highly privacy-sensitive or ambiguous ones, in particular) are about to occur, appropriate methods and interfaces are required to sensitively and actively warn users in real time, enable them to take immediate action when informed, and learn from their responses.

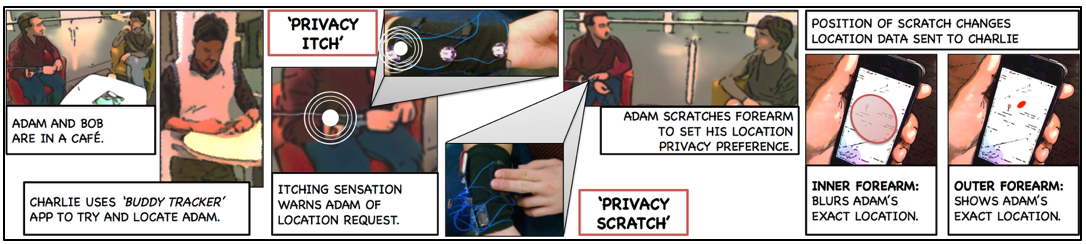

In our work [5], we propose providing users with real-time, haptic, personal information privacy warnings as a metaphorical 'privacy itch' at distinct locations on the volar (inner) surface of their forearm. The users can then respond to these warnings with a metaphorical 'privacy scratch' on the sides of their forearm. Figure 1 shows a use case scenario for on-body privacy warnings and controls. We performed initial evaluations by implementing a haptic prototype, the Privacy Band, and conducting a lab-based user study.

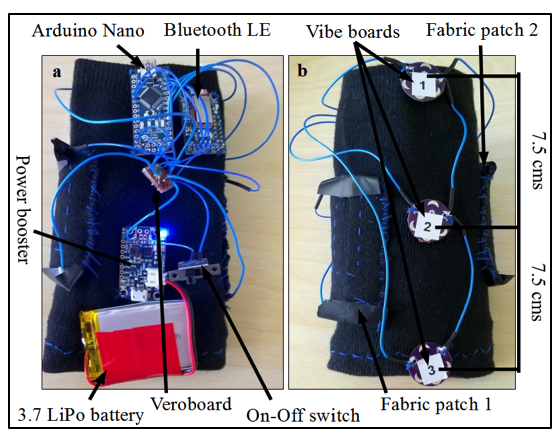

The Privacy Band (see figure 2) is an interactive on-body user interface to be worn on the forearm. The proposed prototype exploits the natural hybrid geometry of the forearm for intuitive public and private interactions. With the help of an external software application, it can warn the user of a potential privacy breach through haptic feedback of varying intensity at distinct locations on the volar (inner) surface of their forearm, each having a predefined meaning known to the user. It also allows the user to respond in an easy and intuitive manner through a simple scratch, touch or squeeze on the sides of their forearm without requiring any visual attention. This helps the user to actively control the release of sensitive information in a suitable, innocuous, continuous and eyes-free manner. The device is direct, intuitive, inherently private and predominantly non-obtrusive to the user. It can also be used as an effective interface or assistive technology in many other scenarios, such as for visually or hearing-impaired people.

Visual Lifelogging in Groups

Blaine A. Price; Avelie Stuart; Gul Calikli; Ciaran McCormick; Vikram Mehta; Luke Hutton; Arosha K. Bandara; Mark Levine and Bashar Nuseibeh (2017). Logging you, Logging me: A Replicable Study of Privacy and Sharing Behaviour in Groups of Visual Lifeloggers. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 1(2), Article 39 (June 2017).

Visual records of people's lives are now common, from wearable cameras to record action sports to social media postings from mobile phones. Visual lifelogging differs slightly from these curated images in that images are captured automatically at regular intervals.

Visual lifelogging has had a variety of uses, from helping early-stage dementia patients improve their memory to normal diary keeping. We were inspired by a study of sharing behaviour of individual lifeloggers on a US university campus to try to understand why and in what contexts groups of lifeloggers protect the privacy of others by choosing to share or not share lifelogging images. Our field study had 7 groups (size range 2–6) totalling 26 participants, of whom 16 were female. Most identified as British (19) and were in their first year of university (24), with about half studying psychology. Groups were either living together (house/flatmates) or on the same degree programme. We wanted to replicate the previous study as closely as possible, but the hardware and software were no longer available, so we built a wearable camera from freely available components and published the plans and software so that others could replicate our study.

Our overall observations support the general findings from the previous study that people will generally protect the privacy of strangers by not sharing images where people can be identified. We found that participants inferred in-group contextual norms around both privacy and self-presentation concerns, but more work is needed to automatically detect emerging privacy norms within groups and make recommendations to group members who have not yet customized their privacy settings in line with the group norms.

Bystander awareness of lifeloggers is clearly important, as recent work on police body cameras shows that this affects both the wearer and the bystander. We deliberately created a very visible design for our camera (bright red, flashing LED) to draw attention to the device, but more work is needed to strike a balance between design aesthetics for the wearer and the need to make others aware that a camera is present. More work is required to unpick these issues and help gather more data to design privacy interfaces that are relevant for both group and single-person lifelogging. Our open source hardware and software for conducting visual lifelogging studies should facilitate this future work.

Detecting social identity

Koschate, M., Dickens, L., Stuart, A., Russo, A., & Levine, M. Detecting group affiliation in language style use. In Preparation for Submission.

Social identity is a widely used concept in the social sciences to understand and predict individuals' attitudes, values and behavior.

Individuals have multiple social identities, each derived from membership of a different social group with its own norms and values. Thus, knowing which identities are salient can tell us about how individuals think and act at particular times. However, social identities are activated by social context, and when the context changes the active social identity can change too. Active social identities and identity shifts are extremely difficult to detect outside the laboratory. This paper proposes that systematic differences in behaviour in real-world settings can be used to detect active social identities and shifts between social identities. By treating language style in written texts as behavior, we provide the first evidence that membership of two different social identities held by the same individual can be reliably differentiated. Representing text with stylistic features, we trained and validated logistic regression models on a large data set (500,000 forum posts). The resulting classifier distinguishes between two widely shared social identities (parent and feminist) with a prediction accuracy of 68% (AUC: 0.73). The model was then applied to data from an online experiment to exclude alternative explanations such as platform, topic, and conversational speech accommodation. Our trained model can distinguish between the two identities in this new context with a comparable accuracy (AUC: 0.68). Together this work shows how we can infer social identities and understand group memberships in a novel, dynamic and context-sensitive way.
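The pipeline described above (stylistic features, a logistic regression model, evaluation by AUC) can be sketched in a few lines of plain Python. This is an illustrative toy rather than the authors' code: the two word lists, the feature definitions and the training settings are assumptions, and a real study would use a much richer feature set and an established statistics library.

```python
import math

# Hypothetical function-word lists; the study's actual stylistic
# features are not specified here.
PRONOUNS = {"i", "me", "my", "we", "our", "us"}
ARTICLES = {"a", "an", "the"}


def style_features(text):
    """Map a text to style rates: the fraction of tokens in each word list."""
    words = text.lower().split()
    n = max(len(words), 1)
    return [
        sum(w in PRONOUNS for w in words) / n,
        sum(w in ARTICLES for w in words) / n,
    ]


def predict(weights, x):
    """Probability of identity 1 under the logistic model (bias is weights[0])."""
    z = weights[0] + sum(wj * xj for wj, xj in zip(weights[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=1.0, epochs=500):
    """Fit logistic regression weights by batch gradient descent."""
    weights = [0.0] * (len(xs[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in zip(xs, ys):
            err = predict(weights, x) - y
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        weights = [wj - lr * g / len(xs) for wj, g in zip(weights, grad)]
    return weights


def auc(scores, labels):
    """Chance that a random positive outscores a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

Because the features are rates of function words rather than content words, the classifier depends on how something is written rather than what it is about, which is the property that lets a style-based model be tested on a new platform or topic.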

Stuart, A., Smith, L. G. E., & Koschate, M. Detection of true social identity in linguistic style even when attempting to write as an outgroup member. In Preparation for Submission.

Recent research has demonstrated the ability to accurately differentiate which social identities people have salient from the style of their writing – an inverse of the typical method of making an identity salient and then observing behaviour. We extend this work by examining whether a social identity can be detected even when participants pretend to hold an outgroup's identity. Participants (Study 1: N=67, Study 2: N=97) wrote short texts about an identity-relevant and an identity-irrelevant topic while thinking of themselves as either holding their actual identity or the outgroup's identity. Logistic regression models using LIWC categories accurately predicted which identity individuals hold (Study 1: 78-94%, Study 2: 73.8-80.7%), irrespective of topic or deception. Moreover, our style-based model outperforms human judges, particularly when the topic was identity-irrelevant and when deception was used. This research solidifies the utility of linguistic identity detection methods and could be used to study infiltration in anonymous forums.

Social identity and privacy

Stuart, A., & Levine, M. (in press). Beyond 'nothing to hide': When identity is key to privacy threat under surveillance. European Journal of Social Psychology.

Privacy is psychologically important, vital for democracy, and in the era of ubiquitous and mobile surveillance technology, facing increasingly complex threats and challenges.

Despite concerns expressed by privacy advocates, surveillance is often justified under a trope that one has 'nothing to hide'. In this paper we conducted focus groups (N = 42) on topics of surveillance and privacy and, using discursive analysis, identified the ideological assumptions and the positions that people adopt to make sense of their participation in a surveillance society. We found a premise that surveillance is increasingly inescapable, but this was only objected to when people reported feeling misrepresented, or where they were unable to withhold their identities or to separate different aspects of their lives. The (in)visibility of the surveillance technology also complicated whether surveillance is constructed as privacy threatening or not: at times it reduced privacy threat, but at other times it increased it. Importantly, people argued that privacy was still achievable so long as they could decontextualize their digital data. We conclude the paper by arguing that those interested in engaging the public in debates about surveillance may be better served by highlighting the identity consequences of surveillance, rather than constructing surveillance as a generalised privacy threat.

Psychology of Privacy Framework

Stuart, A., Bandara, A. K., & Levine, M. A framework for the psychology of privacy. In Preparation for Submission.

Privacy is a key psychological topic that has suffered from historical neglect by the psychology discipline – a neglect that is increasingly consequential in an era of social media connectedness, mass surveillance and the permanence of our electronic footprint.

Despite fundamental changes in the privacy landscape, psychology remains largely unrepresented in debates on the future of privacy. In this paper we conduct a review of the last 12 years in the top 50 psychology journals, revealing a small handful of papers on the psychological dimensions of privacy. By contrast, in disciplines like computer science and media and communication studies, engaging directly with socio-technical developments, interest in privacy has grown considerably. Our review of this literature suggests four domains of interest. These are: sensitivity to individual differences in privacy disposition; a claim that privacy is dialectical; a claim that privacy is inherently contextual; and a suggestion that privacy is as much about psychological groups as it is about individuals. We argue that psychology has much to say about these four domains and present a framework to enable psychologists to build a comprehensive model of the psychology of privacy in the digital age. This framework illustrates how psychologists could undertake studies of privacy, as well as bridge the evidence gaps that prevent an integrated theory of privacy from being formalised.

Packrat

Packrat is a personal data store built using the Django web framework. It provides plugins for several health and wellbeing services and apps, and gives users the ability to combine and analyse data from several sources.

What is Packrat?

Packrat is a personal data store built using the Django and Django REST frameworks. It provides plugins for several health and wellbeing services and apps, such as Fitbit, Beddit and Jawbone.
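As a rough illustration of what a plugin-based personal data store involves, the sketch below registers two stand-in data sources and merges their records by date. It is not Packrat's code or API: the registry, the plugin names, the record fields and the values are all hypothetical, and a real plugin would call the external service rather than return fixed data.

```python
from collections import defaultdict

# Hypothetical plugin registry: source name -> function returning daily records.
PLUGINS = {}


def plugin(name):
    """Decorator that registers a data-source plugin under a source name."""
    def register(fetch):
        PLUGINS[name] = fetch
        return fetch
    return register


@plugin("steps_tracker")
def fetch_steps():
    # Stand-in for a call to an activity tracker's API (made-up values).
    return [
        {"date": "2017-03-01", "steps": 8200},
        {"date": "2017-03-02", "steps": 4100},
    ]


@plugin("sleep_tracker")
def fetch_sleep():
    # Stand-in for a call to a sleep monitor's API (made-up values).
    return [{"date": "2017-03-01", "sleep_hours": 7.5}]


def combine_by_date():
    """Merge the records from every registered plugin into one row per date."""
    merged = defaultdict(dict)
    for fetch in PLUGINS.values():
        for record in fetch():
            fields = {k: v for k, v in record.items() if k != "date"}
            merged[record["date"]].update(fields)
    return dict(merged)
```

Keeping each source behind the same small interface means a new service can be added by writing one more fetch function, without touching the code that combines or analyses the data.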

How is Packrat being used?

Packrat is currently being used to conduct several studies as part of the EPSRC-funded projects Privacy Dynamics, Monetize Me and STRETCH. The data collected by Packrat is being used to study topics ranging from group privacy behaviours to post-operative recovery in knee replacement patients.

Why was Packrat created?

Packrat was originally created to enable researchers at The Open University to study group privacy and sharing behaviours as part of Privacy Dynamics. Since that original study it has been expanded to include more data sources.